The Principle of Narrative Realization: Constrained Semantic Permutation within Fixed Lexical Sets

Abstract

This article introduces the Principle of Narrative Realization (PNR), which posits that a coherent narrative represents an exceptionally rare permutation within the vast combinatorial space of its own Fixed Lexical Set. We formalize this by hypothesizing a heavy-tailed distribution of coherence scores, where the original text occupies a near-optimal configuration. The PNR is situated within a broader theoretical framework that connects combinatorial rarity to function, geometry, and cross-domain applicability. We integrate the principle with Predictive Landscape Semantics (PLS), defining coherence functionally as the capacity to maximize a receiver's predictive improvement. We then propose that this coherence manifests as structured, low-entropy geometric trajectories in semantic space, offering a computationally tractable proxy for analysis. The principle's generality is demonstrated through a parallel framework for music. This synthesis leads to theoretically grounded explorations of bidirectional realization—generating narratives from abstract geometric forms—and future AI-to-AI communication via resonant semantic shapes. By providing precise definitions, formal analysis, and a discussion of these interconnected layers, the work establishes a comprehensive research program for investigating the deep structure of meaningful information.

1 Introduction

Human language allows for a virtually infinite number of sentences, yet full-length, coherent narratives are comparatively rare. It is a familiar intuition that arbitrarily permuting the words of a novel results in incoherence. The Principle of Narrative Realization (PNR) proposed herein specifically investigates the foundational constraints imposed by the lexical inventory itself—constraints governed by principles of efficient, network-based encoding as detailed in the Theory of Minimal Description—on the possibility of forming any globally coherent narrative.

This paper develops that intuition into a formal conceptual framework. We propose the Principle of Narrative Realization (PNR): given a sufficiently large lexical multiset extracted from a coherent narrative, the distribution of coherence scores across all possible permutations is hypothesized to be heavy-tailed, meaning that only an exceedingly small number of permutations achieve coherence comparable to the original. The source text is posited to occupy (or be extremely close to) the global maximum of this coherence distribution. This formalization serves three critical functions:

- Precision: It moves us from the vague notion of "rarity" to a precise, falsifiable hypothesis about the shape of the coherence distribution (e.g., heavy-tailed) and the scaling of that rarity with text length.

- Generality: It provides a common language and mathematical framework to compare the role of constraint across different domains, such as literature and music.

- Generativity: It generates novel, non-obvious hypotheses that are empirically testable, such as the correspondence between coherence and specific geometric signatures in semantic space.

This paper, therefore, demonstrates how a formal model can transform a simple intuition into a productive scientific research program. The PNR provides a fundamental, pre-structural account of how the lexical inventory itself constrains the very possibility of forming coherent narrative relations. It operates at the level of combinatorial potential, establishing the landscape within which higher-order structures can emerge.

Universe 00110000

2 Preliminaries and Definitions

2.1 Fixed Lexical Set (FLS)

Let $S = \langle w_1, w_2, \ldots, w_N \rangle$ be a source narrative of length $N$ tokens. Its Fixed Lexical Set is the multiset

$$L_S = \{\, x_1^{(n_1)},\, x_2^{(n_2)},\, \ldots,\, x_k^{(n_k)} \,\}$$

where $x_i$ is the $i$-th distinct word type, $k$ is the total number of distinct word types in $S$, and $n_i$ is the frequency of the $i$-th type. Thus, $\sum_{i=1}^{k} n_i = N$. Any realized narrative $R$ is a permutation of the tokens in $L_S$ that uses every token exactly once.
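A minimal Python sketch of these definitions, assuming naive whitespace tokenization (a simplification; the text does not fix a tokenizer). The helper names `fixed_lexical_set` and `is_realization` are hypothetical: the first builds LS as a type-to-frequency map, and the second checks that a candidate R uses every token of LS exactly once.

```python
from collections import Counter


def fixed_lexical_set(text: str) -> Counter:
    """Return the Fixed Lexical Set L_S as a multiset {word type: n_i}.

    Assumption: whitespace tokenization stands in for a real tokenizer.
    """
    return Counter(text.split())


def is_realization(candidate: str, fls: Counter) -> bool:
    """True if `candidate` is a permutation of the tokens in `fls`,
    i.e. it contains the same word types with the same frequencies."""
    return Counter(candidate.split()) == fls


source = "the dog saw the cat"
fls = fixed_lexical_set(source)
N = sum(fls.values())  # sum of n_i over the k types equals N
k = len(fls)           # number of distinct word types
```

Here N is 5 and k is 4; "the cat saw the dog" is a realization of LS, while "the dog saw a cat" is not, because it swaps a token for one outside the inventory.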

2.2 Narrative Coherence Metric

To quantify narrative coherence, we move beyond purely structural measures and align the PNR with the functional principles of Predictive Landscape Semantics (PLS). We define the narrative coherence score, fNC, not as a simple composite of linguistic features but as an estimate of the expected predictive improvement (ΔQ) that a realized narrative R would produce in a qualified receiver. Coherence thus becomes a measure of a text's potential to improve a receiver's predictions, which is precisely what makes a text meaningful in the PLS framework.

Formally, $f_{NC}(R) = \mathbb{E}[\Delta Q(R)]$. Since this expectation is not directly computable, we operationalize $f_{NC}(R)$ by modeling it as a function $M_Q$ that takes a vector of computationally tractable linguistic features, $F(R)$, as input to predict this value:

$$f_{NC}(R) \approx M_Q\big(F(R)\big), \qquad F(R) = \big[\, NC_{syn}(R),\, NC_{sem}(R),\, \ldots \,\big] \tag{1}$$

The components NCj(R) are not summands in a weighted average, as in a naive composite score, but features in the vector F(R). Each feature serves as a proxy for a structural property of the text that is hypothesized to facilitate the receiver's predictive model-building and thereby to increase the potential for a large ΔQ:

- NCsyn (Syntactic): Measures local grammatical well-formedness. A high score suggests lower processing cost for the receiver, enabling more efficient extraction of propositions needed to build a predictive model.

- NCsem (Semantic): Measures local topic continuity and propositional integrity. A high score suggests the text provides a consistent stream of evidence, allowing a receiver to efficiently build and extend a coherent semantic model of the narrative's world.

- NCref (Referential): Measures the success of entity tracking. A high score is crucial for maintaining a stable model of the characters and objects within the narrative, a prerequisite for accurate predictions about their actions.

- NCstr (Structural): Measures the presence of conventional high-level narrative components. A high score indicates the narrative follows a predictable schema, which guides the receiver's high-level expectations and model-building.

- NCprag (Pragmatic): Measures alignment with world knowledge. A high score ensures the narrative's propositions can be easily integrated into the receiver's existing predictive models of the world, minimizing belief-update conflicts.

In this formulation, the fixed weights wj of a naive weighted sum are replaced by the model MQ, which could be a learned function (e.g., a regression model) whose parameters capture the complex, potentially non-linear interactions among these features in producing a meaningful experience. The features NCj(R) are selected because they are hypothesized to reflect textual properties that directly enable a receiver to update their predictive landscape efficiently, thus maximizing the potential for a significant ΔQ. Precisely determining the features in F(R), and learning or parameterizing MQ itself, are significant challenges deferred to future work (see Section 6.1). The approach nonetheless provides a concrete and theoretically grounded definition of the coherence landscape that the PNR explores, linking it directly to the cognitive function of meaning. While this functional model is the theoretical ideal, Appendix A uses a stricter, non-compensatory interpretation of these features to formally prove a key bound under simplified conditions.
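Eq. (1) leaves MQ unspecified. As one hedged illustration of the regression option mentioned above, the sketch below fits a linear MQ by ordinary least squares, mapping feature vectors F(R) to ΔQ estimates. Everything here is an assumption: the helper names are hypothetical, the feature values and ΔQ targets are synthetic placeholders (the text defines neither a feature extractor nor a procedure for measuring ΔQ), and a linear form cannot capture the non-linear interactions the text anticipates.

```python
import numpy as np


def fit_linear_mq(features: np.ndarray, delta_q: np.ndarray) -> np.ndarray:
    """Fit a hypothetical linear M_Q by least squares.

    features: shape (m, d), one row F(R) = [NC_syn, NC_sem, ...] per text.
    delta_q:  shape (m,), an estimate of ΔQ for each text.
    Returns d + 1 coefficients; the last one is an intercept.
    """
    design = np.hstack([features, np.ones((features.shape[0], 1))])
    coef, *_ = np.linalg.lstsq(design, delta_q, rcond=None)
    return coef


def predict_fnc(coef: np.ndarray, features: np.ndarray) -> np.ndarray:
    """Approximate f_NC(R) ≈ M_Q(F(R)) with the fitted linear model."""
    design = np.hstack([features, np.ones((features.shape[0], 1))])
    return design @ coef


# Synthetic placeholder data: 5 features (syn, sem, ref, str, prag) per text.
rng = np.random.default_rng(0)
F = rng.uniform(0.0, 1.0, size=(200, 5))
true_coef = np.array([0.5, 1.0, 0.8, 0.3, 0.4, -0.2])  # hypothetical weights
dq = np.hstack([F, np.ones((200, 1))]) @ true_coef
coef = fit_linear_mq(F, dq)
```

Because the targets here are generated noiselessly from known weights, the fit recovers them exactly; with real ratings, a non-linear learner could replace the linear form behind the same two-function interface.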

2.3 Notation Summary

Table 1: Notation Summary

| Symbol | Meaning |
|---|---|
| N | Total tokens in source narrative S |
| k | Distinct word types in S |
| xi | The i-th distinct word type |
| ni | Frequency of the i-th word type xi |
| LS | Fixed Lexical Set derived from S |
| R | A realized narrative (permutation of LS) |
| fNC(·) | Narrative coherence score (Eq. (1)) |
| MQ, F(R) | Coherence model and its feature vector (Eq. (1)) |
| τ | General coherence threshold |
| P(LS) | Set of all distinct permutations of LS |
| \|P(LS)\| | Total number of distinct permutations of LS (Eq. (2)) |
| P≥ τ | Set of permutations with fNC(·) ≥ τ |
| N0 | Coherence horizon: threshold length above which coherent alternatives become vanishingly rare |
| C, α | Constants of the polynomial decay bound (Eq. (3)) |
| cj, βj | Constants for polynomial decay of constraint satisfaction |

3 The Principle of Narrative Realization

3.1 Formal Statement

Proposed Principle 1 (Principle of Narrative Realization): Let LS be a Fixed Lexical Set of size N derived from a source narrative S. The fundamental hypothesis of the PNR is that the rank-ordered distribution of coherence scores fNC(R) across the set of all distinct permutations P(LS) is heavy-tailed. This implies that only an exceptionally small number of permutations achieve high coherence scores, with the original narrative S occupying (or being exceedingly close to) the global maximum coherence score, a claim whose empirical investigation for full narratives would necessitate sophisticated proxies for fNC.

The total number of distinct permutations of the multiset LS is given by

$$|P(L_S)| = \frac{N!}{\prod_{i=1}^{k} n_i!} \tag{2}$$
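Because |P(LS)| overflows any fixed-width number for realistic N, it is easiest to work with its logarithm. The sketch below (the helper name `log10_permutations` is hypothetical) evaluates Eq. (2) with Python's `math.lgamma`, using ln n! = lgamma(n + 1), and takes the frequencies ni directly as input.

```python
import math
from typing import Iterable


def log10_permutations(freqs: Iterable[int]) -> float:
    """log10 |P(L_S)| = log10( N! / prod_i n_i! ), computed via lgamma.

    `freqs` holds the type frequencies n_1, ..., n_k; N is their sum.
    Working in log space avoids overflow for novel-length N.
    """
    freqs = list(freqs)
    n_total = sum(freqs)
    ln_count = math.lgamma(n_total + 1) - sum(math.lgamma(n + 1) for n in freqs)
    return ln_count / math.log(10)


# "the dog saw the cat": frequencies (2, 1, 1, 1), so |P| = 5! / 2! = 60.
small = 10 ** log10_permutations([2, 1, 1, 1])
```

The magnitude for a full novel depends on its actual frequency profile, so the function is meant to be applied to the type counts of a real text rather than to assumed values.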

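For natural-language texts, this value grows explosively with N. By Stirling's approximation, $\ln |P(L_S)| \approx N H$, where $H = -\sum_{i=1}^{k} (n_i/N) \ln (n_i/N)$ is the entropy of the text's word-type distribution, so |P(LS)| grows at least exponentially in N, and faster still insofar as H rises as the vocabulary k expands with text length. We define a threshold length N0 above which lexical specificity is hypothesized to sharply constrain grammatical recombination. For N ≥ N0 and any sufficiently high coherence threshold τ ∈ (0,1), the number of permutations |P≥ τ| is expected to be very small, potentially approaching a small constant. Consequently, the proportion of such coherent texts is conjectured to decay sharply with N, possibly bounded by a polynomial form: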

$$\frac{|P_{\geq\tau}|}{|P(L_S)|} \le C\,N^{-\alpha}, \qquad \alpha > 0,\; C > 0. \tag{3}$$

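The parameters C and α may depend on language and text type. A further **Hypothesized Result 3.1.1** posits that the exponent α itself may be a non-decreasing function of N, implying that the proportion of coherent texts shrinks even more rapidly for longer narratives. Eq. (3) is deliberately conservative: if |P≥ τ| indeed stays near a small constant while |P(LS)| grows as in Eq. (2), the true proportion falls faster than any polynomial in N.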

3.2 Justification and Constraint Interaction

The plausibility of the PNR rests on two pillars: the combinatorial immensity of the permutation space and the multiplicative filtering effect of multiple, partially independent linguistic constraints.

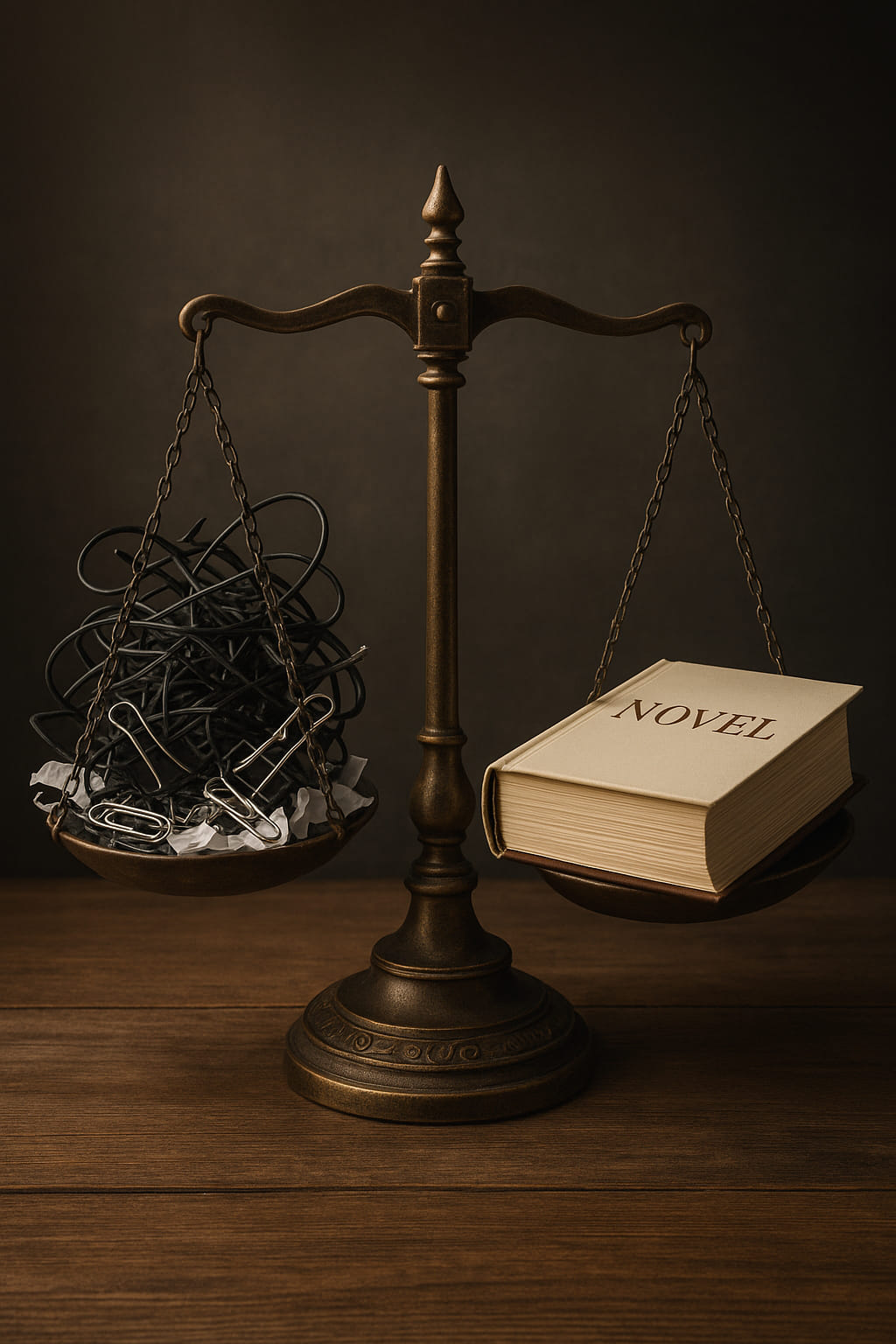

Combinatorial Immensity: The classic Infinite Monkey Theorem illustrates how improbable it is, over any realistic time span, to produce a coherent text (e.g., a Shakespearean play) from a random sequence of characters. The PNR poses a conceptually deeper problem: it grants the "monkey" a massive advantage by providing the complete and correct lexical inventory (the FLS) of such a play. The question then becomes not whether a coherent text can arise from a random alphabet, but whether permuting the exact, correct set of words can yield another equally coherent narrative. Even with this head start, the PNR posits that the combinatorial space remains so vast that coherent arrangements are exceedingly rare. For a novel with $N \approx 10^5$, the number of permutations can exceed $10^{150{,}000}$, rendering exhaustive search impossible and making the random discovery of a coherent sequence vanishingly improbable.

Multi-Layered Constraint Filtering: The numerator in Eq. (3), |P≥ τ|, is aggressively pruned by successive layers of constraints, each corresponding to a component of the coherence metric fNC:

- Syntactic Constraints: Natural-language grammars impose strict ordering rules. The fixed counts of parts-of-speech in LS severely limit the number of permutations that are even locally grammatical.

- Semantic & Referential Constraints: The challenge of satisfying these constraints is not merely a matter of local word meaning; it is a network-level constraint. The Theory of Minimal Description (TMD) posits that words exist within a structured semantic network governed by principles like Semantic Directionality and Network Connection. Each word's meaning is stabilized by its unique position and its asymmetric definitional relationships to other words. A random permutation would sever these essential, directional links, making the formation of coherent referential chains and sustained themes across the narrative a statistical impossibility. The specific inventory in LS therefore represents a highly constrained sub-graph of this network, not just a bag of words.

- Structural & Narrative Logic Constraints: A global narrative requires higher-order structures like causal event chains, consistent voice, and thematic arcs. An alternative permutation must satisfy all these global constraints simultaneously, a highly improbable outcome.

The power of the PNR comes from the hypothesis that these filters, while not perfectly independent, are not fully correlated either. This is formalized in the following assumption, which is critical for establishing the steep decay in Eq. (3):

Assumption 1 (Weak Constraint Interdependence): The filtering effects of the constraint classes are not perfectly covariant. While some correlation exists (e.g., satisfying structural constraints may aid semantic coherence), they are sufficiently independent that their joint filtering effect is far stronger than any single constraint. If Assumption 1 holds, their joint acceptance rate would be upper-bounded by a product of their individual acceptance rates, possibly adjusted for limited positive correlation. If each such individual rate decreases polynomially with N (as per Conjecture 1), their product would decrease even more steeply, supporting the form of Eq. (3). Corpus studies on lexical diversity versus syntactic flexibility could provide insights here by quantifying how tightly lexical choices constrain or enable syntactic variation, which directly informs the likely degree of (in)dependence between semantic/lexical and syntactic filtering effects.

Conjecture 1

For any constraint class j (syntactic, semantic, etc.), the fraction of permutations satisfying it sufficiently is bounded above by a polynomial decay $c_j N^{-\beta_j}$ for $N \ge N_0$, where $\beta_j > 0$. This reflects that individual constraints are powerful but unlikely to induce exponential decay alone. Heuristically, as N increases, the number of ways to satisfy a specific constraint type relative to the total number of permutations decreases due to increasingly complex interdependencies over longer spans. Polynomial decay is thus a suitable model for this substantial but not total filtering effect.
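To make explicit how Conjecture 1 and Assumption 1 combine into Eq. (3), consider the idealized case in which the constraint classes j ∈ J are fully independent (a simplification; Assumption 1 asserts only weak interdependence). The joint acceptance rate is then the product of the individual rates, each bounded by Conjecture 1:

$$\frac{|P_{\geq\tau}|}{|P(L_S)|} \;\le\; \prod_{j \in J} c_j\, N^{-\beta_j} \;=\; \Big(\prod_{j \in J} c_j\Big)\, N^{-\sum_{j \in J} \beta_j}, \qquad N \ge N_0,$$

so Eq. (3) holds with $C = \prod_j c_j$ and $\alpha = \sum_j \beta_j$, an exponent larger than any single $\beta_j$. Positive correlation between classes would raise the joint rate above this product, which is why Assumption 1 allows for an adjustment to C and α.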

Proving Conjecture 1 and rigorously quantifying the interdependence in Assumption 1 are significant challenges for future research (Section 6.3).

3.3 Consequences and the Coherence Horizon

- Vanishing Alternative Coherence: The heavy-tailed distribution implies that, for N ≥ N0, the set of highly coherent permutations consists almost entirely of S and its trivial variants.

- Coherence Horizon: The threshold N0 marks the length at which the combinatorial space becomes so over-constrained that nearly all alternative permutations fall below a viable coherence threshold. Below N0, multiple distinct coherent permutations may be possible; above it, they become vanishingly rare.

3.4 Random-Bag Baseline: The Necessity of Inventory

To isolate the effect of permutation, we first establish that the lexical inventory itself is necessary. Let B be a "bag" of N words drawn randomly from a unigram distribution. The probability that any permutation of B can form a coherent narrative approaches zero as N increases.

Proposition 0 (Inventory-Necessity Claim): For narrative length $N \gg 1$, $\Pr\big[\exists\, \pi \in P(B) : f_{NC}(\pi) \ge \tau\big] \to 0$ as $N \to \infty$.

Sketch of justification. Random draws almost surely yield unsuitable ratios of parts of speech, too few repeated entities to sustain referential chains, and other defects that preclude coherence. The number of distinct lexical bags of size exactly N over a vocabulary V (i.e., multisets of N tokens) is the binomial coefficient $\binom{N+|V|-1}{|V|-1}$, which for fixed $|V| > 1$ and large N grows as a polynomial in N of degree $|V|-1$, approximately $N^{|V|-1}/(|V|-1)!$. Assuming that the subset of 'interpretable' bags satisfying minimal lexical requirements does not grow at a rate comparable to this total, the probability of a random bag meeting even minimal inventory requirements for coherence decays sharply with N. This highlights that having the correct inventory (the FLS) is a prerequisite for coherence. The PNR addresses the subsequent, deeper challenge of arranging that inventory correctly.
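The bag count used in this sketch can be checked numerically. The Python fragment below (hypothetical helper names) computes the exact number of size-N multisets over a vocabulary of size |V| with `math.comb` (the "stars and bars" count) and the leading-order approximation N^(|V|-1)/(|V|-1)!; their ratio tends to 1 as N grows with |V| fixed.

```python
import math


def num_bags(n_tokens: int, vocab_size: int) -> int:
    """Exact number of multisets of n_tokens drawn from vocab_size types:
    C(N + |V| - 1, |V| - 1), by stars and bars."""
    return math.comb(n_tokens + vocab_size - 1, vocab_size - 1)


def num_bags_approx(n_tokens: int, vocab_size: int) -> float:
    """Leading-order approximation N^(|V|-1) / (|V|-1)! for large N."""
    return n_tokens ** (vocab_size - 1) / math.factorial(vocab_size - 1)


# With |V| = 3 and N = 10^6 the two counts agree to within 10^-5 (relative).
ratio = num_bags(10**6, 3) / num_bags_approx(10**6, 3)
```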

4 Implications

4.1 Information Theory

Coherent narratives are low-entropy states. The probability of randomly selecting S from its permutation space is $P_S = 1/|P(L_S)|$, implying a high self-information (Shannon information content) $I(S) = -\log_2 P_S$. The PNR further proposes that coherence itself is a high-information property, since the set of coherent texts P≥ τ is a tiny fraction of the total space.
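Written out with Eq. (2) and Eq. (3), the two information quantities in this paragraph are as follows, where I(coherent) is an illustrative label for the self-information of the event that a uniformly random permutation of LS clears the threshold τ:

$$I(S) = -\log_2 P_S = \log_2 |P(L_S)| = \log_2 N! - \sum_{i=1}^{k} \log_2 n_i!$$

$$I(\text{coherent}) = -\log_2 \frac{|P_{\geq\tau}|}{|P(L_S)|} \;\ge\; \alpha \log_2 N - \log_2 C.$$

The inequality follows directly from Eq. (3), so the information carried by coherence grows without bound in N whenever α > 0.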

4.2 Computational Linguistics

The PNR frames the challenge of long-form generation for large language models: a model must navigate a vast space where almost every path deviates from the narrow manifold of globally coherent sequences. This underscores the difficulty of maintaining global coherence.

4.3 Literary Theory

An author’s lexical choices (LS) constitute a narrative "fingerprint." The PNR formalizes how these micro-level decisions constrain macro-level possibilities. This 'fingerprint' is more than just a list of words; it represents a specific, structured sub-graph of the language's total semantic network, as described by the Theory of Minimal Description (TMD). This reinforces notions of authorial style and the deep structure that emerges from a Fixed Lexical Set, an inventory whose component words and their interrelations are already highly constrained by the principles of TMD.

4.4 Creativity and Constraint

Human creativity often thrives under partial constraints. The PNR explores near-total constraint: using all and only the original words. The principle suggests that as narrative length N increases, the creative latitude to form alternative, equally coherent full narratives from the same set of words collapses.

4.5 Synergy with Predictive Landscape Semantics

The Principle of Narrative Realization (PNR) resonates deeply with theories of meaning that foreground predictive processing, such as Predictive Landscape Semantics (PLS). PLS defines information as a physically instantiated, substrate-independent pattern with the potential to enable a system to improve predictive accuracy. Meaning is the quantifiable improvement (ΔQ) in a receiver's predictive accuracy from processing such input. Incoherent permutations of a text's Fixed Lexical Set (LS) would likely yield ΔQ ≤ 0, rendering them meaningless or even costly to process, incurring a high Signal Cost (SC) for minimal or negative Meaning Potential (MP).

A coherent narrative S, in contrast, is a sequence optimized to deliver a maximal ΔQ. Its components—syntactic, semantic, referential, structural, and pragmatic—all serve to facilitate the efficient construction of a robust predictive model in the reader's mind. The rarity of high-coherence texts posited by the PNR is thus equivalent to the rarity of high-ΔQ sequences, framing narrative coherence as a functional imperative for efficient knowledge transfer.

- Coherent Narratives as Efficient Prediction Enhancers: A coherent narrative S is a specific sequence that allows a reader to efficiently build a robust predictive model. Each component of the fNC metric directly facilitates this predictive improvement. For instance, NCsyn ensures basic parsability, while NCstr provides plot arcs that guide higher-level predictions.

- Rarity and Value: The extreme rarity of such high-ΔQ sequences implies that coherent narratives are high-value informational structures. They offer a substantial "return on investment" in processing effort because the Signal Cost (SC) of reading is offset by a large gain in predictive understanding (high Meaning Potential, MP).

The synergy between PNR and PLS thus paints a picture where narrative coherence is not an arbitrary aesthetic preference but a functional imperative related to the efficient acquisition of predictive power. The specific lexical configuration of an original narrative S is valuable precisely because it is one of the vanishingly few permutations of LS that unlocks this predictive potential for a reader.
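PLS leaves the measurement of ΔQ open. One hedged way to make it concrete, assumed here purely for illustration, is to score a receiver's predictions on a fixed set of probe events by log-loss before and after it processes R, and to take ΔQ as the reduction in mean log-loss. The helper names and the probabilities below are hypothetical placeholders, not outputs of any real receiver model.

```python
import math
from typing import Sequence


def mean_log_loss(probs_of_observed: Sequence[float]) -> float:
    """Mean negative log-probability (nats) the receiver assigned to the
    outcomes that actually occurred on the probe events."""
    return -sum(math.log(p) for p in probs_of_observed) / len(probs_of_observed)


def delta_q(before: Sequence[float], after: Sequence[float]) -> float:
    """Assumed operationalization: ΔQ as the drop in mean log-loss.

    Positive: processing the text improved prediction.
    Zero or negative: no improvement, or the text misled the receiver.
    """
    return mean_log_loss(before) - mean_log_loss(after)


# Hypothetical probabilities for the observed outcome of three probe events.
coherent_gain = delta_q(before=[0.25, 0.25, 0.25], after=[0.8, 0.7, 0.9])
scrambled_gain = delta_q(before=[0.25, 0.25, 0.25], after=[0.2, 0.25, 0.2])
```

Under this reading, an incoherent permutation that leaves the receiver worse calibrated yields ΔQ < 0, matching the "costly to process" case described above.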

4.6 Geometric Manifestations of Coherence

The rarity of coherent narratives may manifest in the geometry of their trajectories through a semantic vector space. Let E be a function embedding textual units (e.g., sentences) into vectors in $\mathbb{R}^d$. A narrative R thus forms a trajectory $T(R) = \langle v_1, \ldots, v_M \rangle$ in this space, where M is the number of textual units. This perspective draws a parallel to physical resonance phenomena like cymatics, where only specific resonant frequencies induce stable, ordered geometric patterns. Similarly, coherent narratives may represent stable "resonant modes" of semantic progression, while the vast majority of permutations correspond to non-resonant "noise."

4.6.1 Hypothesized Geometric Correlates of Coherence

We hypothesize that certain geometric or topological features, extracted from T(R), may serve as correlates for the narrative coherence score fNC(R). Candidate features include:

- Global Smoothness/Regularity: Measures quantifying the local consistency of direction or velocity along the trajectory.

- Topological Complexity: Features derived from Topological Data Analysis (TDA), such as Betti numbers, which count topological structures like connected components or loops.

- Density and Attractor Dynamics: Coherent narratives might exhibit higher local density or converge towards specific "attractor" regions in the semantic space.
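As an illustration of the first two candidate features, the sketch below computes (i) a smoothness score, taken here as the mean cosine similarity between consecutive displacement vectors v_{t+1} − v_t, and (ii) the 0th Betti number β0 of the trajectory's point cloud at a single scale ε, i.e. the number of connected components when points at distance at most ε are linked (the degree-0 part of a Vietoris–Rips construction). Both operationalizations, the function names, and the choice of ε are assumptions; the text does not fix them, and a full TDA pipeline would track such features across all scales.

```python
import numpy as np


def smoothness(traj: np.ndarray) -> float:
    """Mean cosine similarity of consecutive steps of a trajectory.

    traj: shape (M, d) with M >= 3, the embedded units v_1..v_M.
    Returns a value in [-1, 1]; near 1 means a consistent direction.
    Zero-length steps are treated as contributing a cosine of 0.
    """
    steps = np.diff(traj, axis=0)
    norms = np.linalg.norm(steps, axis=1)
    unit = steps / np.where(norms[:, None] > 0, norms[:, None], 1.0)
    return float(np.mean(np.sum(unit[:-1] * unit[1:], axis=1)))


def betti0(traj: np.ndarray, eps: float) -> int:
    """Connected components when points within distance eps are linked."""
    m = len(traj)
    parent = list(range(m))

    def find(i: int) -> int:
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    dists = np.linalg.norm(traj[:, None, :] - traj[None, :, :], axis=-1)
    for i in range(m):
        for j in range(i + 1, m):
            if dists[i, j] <= eps:
                parent[find(i)] = find(j)  # union the two components
    return len({find(i) for i in range(m)})
```

A straight path scores 1.0 on smoothness and a path that reverses at every step scores −1.0; two well-separated clusters give β0 = 2 at a small ε and β0 = 1 once ε exceeds the gap.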

4.6.2 Conjecture G: Geometric Proxy for Narrative Coherence

Conjecture G (Geometric Coherence Correspondence): There exist embedding and analysis methods such that a geometric score, fgeom(R), derived from features of the trajectory T(R), significantly correlates with the narrative coherence score fNC(R).

Coherent narratives are hypothesized to trace regular, structured paths, while incoherent permutations produce random, high-entropy trajectories. This provides a potential computationally tractable proxy for fNC.

4.6.3 Implications for PNR Validation and Future Research

If Conjecture G receives empirical support, fgeom(R) could offer a more computationally efficient proxy for fNC(R), facilitating larger-scale empirical investigations of the PNR. The PNR predicts that very few permutations will have fNC(R) ≈ fNC(S). If fgeom(R) is a valid proxy, then similarly few permutations should yield fgeom(R) ≈ fgeom(S). This could be tested by comparing the distribution of geometric scores for original narratives versus their random permutations.
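The comparison proposed above amounts to a permutation test. The sketch below shuffles the order of the embedded units of S many times, scores each shuffle with a geometric score, and reports the fraction of shuffles scoring at least as high as the original, an empirical p-value. It assumes sentence-level units and uses the mean consecutive-step cosine as a stand-in for fgeom; both are illustrative choices rather than the text's definitions, the function names are hypothetical, and the embeddings in the usage example are synthetic.

```python
import numpy as np


def f_geom(traj: np.ndarray) -> float:
    """Stand-in geometric score: mean cosine between consecutive steps.
    Assumes consecutive points are distinct (no zero-length steps)."""
    steps = np.diff(traj, axis=0)
    unit = steps / np.linalg.norm(steps, axis=1, keepdims=True)
    return float(np.mean(np.sum(unit[:-1] * unit[1:], axis=1)))


def permutation_p_value(traj: np.ndarray, n_shuffles: int = 1000, seed: int = 0) -> float:
    """Fraction of random reorderings whose score is >= the original's.

    Uses the (hits + 1) / (n + 1) convention so the estimate is never zero.
    """
    rng = np.random.default_rng(seed)
    observed = f_geom(traj)
    hits = sum(
        f_geom(traj[rng.permutation(len(traj))]) >= observed
        for _ in range(n_shuffles)
    )
    return (hits + 1) / (n_shuffles + 1)


# Synthetic "coherent" trajectory: a gently curving path of 30 units in R^8.
t = np.linspace(0.0, 1.0, 30)
path = np.stack([np.cos(t), np.sin(t)] + [t * c for c in range(1, 7)], axis=1)
p = permutation_p_value(path)
```

A small p-value says the original ordering is geometrically atypical among its own permutations, which is the pattern the PNR predicts if fgeom tracks fNC.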

4.6.4 Potential for Generative Applications: Bidirectional Realization

A compelling implication of Conjecture G is the potential for bidirectional realization: generating text by first designing purposeful abstract geometric forms. A crucial precursor involves mining this geometric landscape to identify 'coherence signatures'—recurrent geometric motifs that robustly correlate with high coherence. This could transform our understanding of narrative structure from descriptive accounts to a quantitative cartography of narrative possibility. If such signatures are found, a generative paradigm could unfold:

- Abstract Trajectory Design: An author or AI could design an abstract "shape", imbuing it with geometric properties known to correlate with coherence (e.g., smoothness, specific topological features).

- Textual Realization: This abstract trajectory would then be "decoded" into a sequence of textual units. This involves generating text whose semantic embeddings are constrained to follow the pre-defined path, while also maintaining local linguistic well-formedness.
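A deliberately crude sketch of the Textual Realization step, assuming the decoder only selects from a pool of pre-written candidate sentences whose embeddings are already known: at each waypoint of the designed trajectory it picks the nearest unused candidate. The function name and the greedy strategy are hypothetical; a real system would generate text and would also have to enforce the local well-formedness described above, which this sketch ignores.

```python
from typing import List, Set

import numpy as np


def realize_trajectory(waypoints: np.ndarray, candidates: np.ndarray) -> List[int]:
    """Greedily map each waypoint to the nearest not-yet-used candidate.

    waypoints:  shape (M, d), the designed abstract trajectory.
    candidates: shape (P, d), embeddings of candidate sentences, P >= M.
    Returns candidate indices in trajectory order.
    """
    if len(candidates) < len(waypoints):
        raise ValueError("need at least as many candidates as waypoints")
    used: Set[int] = set()
    order: List[int] = []
    for w in waypoints:
        dists = np.linalg.norm(candidates - w, axis=1)
        for idx in np.argsort(dists):  # nearest first
            if int(idx) not in used:
                used.add(int(idx))
                order.append(int(idx))
                break
    return order
```

Greedy matching is myopic: an early choice can force a poor fit later. A globally optimal one-to-one assignment (for example with SciPy's `scipy.optimize.linear_sum_assignment`) or a beam search over orderings would be natural refinements.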

This approach gains further theoretical support from PLS. Different "coherent shapes" might possess different degrees of Meaning Potential (MP), corresponding to the predictive value of the narratives they generate. This leads to the idea that different geometric forms might possess varying intrinsic predictive power. Some shapes might yield narratives of profound insight (high MP), while others yield predictable genre stories (lower MP). We might be able to identify and generate abstract structures predisposed to yield high-value knowledge.

5 Cross-Domain Parallels and Extensions

5.1 The Principle in Music: A Parallel Framework

The PNR finds a compelling, and potentially more empirically tractable, parallel in the domain of music, suggesting a "Principle of Musical Realization" (PMR).

5.1.1 Fixed-Note Set (FNS) and Musical Coherence

We can define a Fixed-Note Set (FNS) as the multiset of all note-events (pitch, duration, timbre, etc.) in a musical piece. A musical coherence metric, fMC, can be defined with components for harmonic, rhythmic, voice-leading, formal, and orchestrational coherence. For example:

$$f_{MC}(R_{mus}) = \sum_{j \in \{har,\, rhy,\, vc,\, form,\, orch\}} w_j \cdot MC_j(R_{mus})$$

Crucially, many of these musical components already possess quantitative models (e.g., geometric models of voice-leading, tonal tension models), making the PMR potentially more empirically tractable than the PNR.
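As one example of such an existing quantitative model, a voice-leading component MCvc could be built on the size of the smallest voice leading between consecutive chords, in the spirit of geometric voice-leading theory: map each note of one chord to a note of the next so that the total pitch-class displacement, measured in semitones around the 12-tone circle, is minimal. The brute-force search, the restriction to equal-size chords, and the function names below are simplifying assumptions.

```python
from itertools import permutations
from typing import Sequence


def pc_distance(a: int, b: int) -> int:
    """Shortest distance in semitones between two pitch classes (mod 12)."""
    d = (a - b) % 12
    return min(d, 12 - d)


def voice_leading_size(chord_a: Sequence[int], chord_b: Sequence[int]) -> int:
    """Minimal total semitone motion over all one-to-one voice assignments.

    Chords are pitch-class collections of equal size; brute force over
    permutations is adequate for the small chords typical of tonal harmony.
    """
    if len(chord_a) != len(chord_b):
        raise ValueError("chords must have the same number of voices")
    return min(
        sum(pc_distance(a, b) for a, b in zip(chord_a, perm))
        for perm in permutations(chord_b)
    )


C_MAJOR, A_MINOR, G_MAJOR = (0, 4, 7), (9, 0, 4), (7, 11, 2)
```

C major to A minor moves only G to A (size 2), while C major to G major keeps G and moves C to B and E to D (size 3); a coherence component could, for instance, reward progressions whose average size stays small.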

5.1.2 Combinatorial Scarcity and Geometric Manifestations in Music

The combinatorial argument applies directly: the number of permutations of an FNS is astronomical. Only a tiny fraction would satisfy the joint constraints of tonality, meter, voice-leading, and form. The existence of mature geometric models in music theory provides a ready-made framework for testing a musical version of Conjecture G, where coherent musical pieces trace constrained paths in these abstract spaces. The tight coupling of constraints in music suggests the PMR might operate even more stringently than its textual counterpart.

5.2 AI-to-AI Communication via Resonant Semantic Shapes

The PNR framework, especially its geometric interpretation, suggests a future for AI-to-AI communication that transcends linear, token-based language.

5.2.1 The Efficiency Hypothesis: Shape as Message

Instead of transmitting token streams, advanced AIs might communicate by exchanging high-dimensional, resonant structures. These "shapes" would be holistically understood, with meaning encoded in their overall topology, curvature, and density within a shared latent space. Such a modality would be highly compressed, reflecting the principles of efficient information transfer explored in the Law of Compression, and allow for near-instantaneous coherence checking.

5.2.2 The Predictive Alignment Principle

Effective communication would occur if a message-shape T transmitted by AI1 is not only recognized as geometrically coherent by AI2 but also produces a significant predictive improvement (ΔQ) in AI2's world model. This can be formalized as a principle of predictive alignment: communication is successful if $f_{geom}^{(AI_1)}(T) \approx f_{geom}^{(AI_2)}(T)$ and $\Delta Q_{AI_2}(T) > \varepsilon$ for some threshold $\varepsilon$. Meaning is transferred when a geometrically coherent shape induces predictive improvement in the receiver.
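The alignment condition can be written as a simple predicate. Because "≈" is not quantified in the text, the sketch introduces a hypothetical tolerance δ on the difference between the two agents' geometric scores; the scores and the receiver's ΔQ are passed in as numbers, since how each agent computes them is left open.

```python
def predictively_aligned(
    fgeom_sender: float,
    fgeom_receiver: float,
    delta_q_receiver: float,
    eps: float,
    delta: float = 0.05,  # hypothetical tolerance standing in for "≈"
) -> bool:
    """Successful transfer: both agents judge the shape similarly coherent
    (|f1 - f2| <= delta) AND the receiver's ΔQ exceeds eps."""
    return abs(fgeom_sender - fgeom_receiver) <= delta and delta_q_receiver > eps
```

The two failure modes named in Section 5.2.3 correspond to the two conjuncts: a geometric mismatch fails the first test, and a recognized but uninformative shape fails the second.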

5.2.3 Implications for Intersubjective AI

This model suggests that resonant semantic forms could serve as an interlingual or inter-representational bridge, allowing AIs with different internal architectures to communicate via a shared geometric understanding of coherence. Miscommunication would be measured not in token errors but in topological deviations or failures of predictive alignment.

5.3 Toward Universal Principles of Coherent Information

The parallels between narrative and music suggest the PNR may be an instance of a more general, cross-domain principle governing the structure of all meaningful information. This leads to the proposal of a unifying meta-hypothesis that extends beyond communication to the very nature of knowledge.

5.3.1 The Universality of Coherent Shape Hypothesis (UCSH)

We propose the Universality of Coherent Shape Hypothesis (UCSH): Any sufficiently coherent structure that forms a rare, low-entropy configuration within a well-defined abstract space corresponds to meaningful information for some class of interpretation-capable intelligence. This hypothesis posits that "meaning" at its most fundamental level is synonymous with "coherent form." It suggests that the deep structure of a compelling narrative, a sound scientific theory, and a valid mathematical proof are all instantiations of the same principle: the realization of a rare, stable, and structured trajectory in a vast combinatorial space of possibilities.

5.3.2 Coherent Shapes Across Domains: A Research Program

The UCSH is a generative research program aimed at identifying these coherent shapes across diverse domains of knowledge. This involves defining the abstract space and the nature of the "trajectory" for each field:

- Scientific Theories: The abstract space could be a "theory space" of concepts, variables, and relational operators. A powerful theory like General Relativity would represent a remarkably smooth, self-consistent, and predictive manifold within this space, while inconsistent or ad-hoc theories would be represented as fragmented or high-entropy paths.

- Logical Arguments & Mathematical Proofs: Here, the space consists of axioms and propositions, and the "trajectory" is a sequence of valid deductive steps. A valid proof is a perfect, deterministic path from premises to conclusion, where any deviation results in a fallacy (an incoherent shape).

The goal of this research would be to develop a comparative "geometry of knowledge," studying the common topological and geometric properties of these disparate coherent forms.

5.3.3 A 'Geometry of Meaning' and a New Epistemology

If the UCSH holds, it implies the existence of a universal "geometry of meaning." This framework would provide a new, structural epistemology, suggesting that to "understand" a field is to develop an intuitive grasp of the characteristic shapes of its coherent structures. Learning would be reframed as developing the ability to recognize, navigate, and create these structured trajectories within a given abstract space. This echoes the historical aspirations for a characteristica universalis, a universal formal language, but reimagines it not as a set of symbols but as a universal grammar of shape and form. Investigating this geometry is a profound long-term goal for uncovering the deep architecture of meaning, reason, and intelligence itself.

6 Future Directions

The Principle of Narrative Realization opens a rich, multi-pronged research program that spans empirical validation, theoretical refinement, and the development of advanced computational models:

6.1 Empirical Validation and Metric Operationalization

A primary objective is the empirical validation of the core PNR hypothesis. This necessitates the operationalization of a robust and reproducible narrative coherence metric, fNC(·). This itself is a significant challenge, as it assumes that the complex, multi-faceted quality of coherence can be meaningfully collapsed into a single scalar value. Developing this metric will involve integrating multiple computational measures for syntax, semantics, reference, and structure, with the parameters of the model MQ (as defined in Eq. (1)) potentially being learned from rated corpora or determined through extensive sensitivity analyses. Given that exhaustive permutation of any non-trivial text is computationally infeasible, this empirical pathway must leverage heuristic search methods, such as genetic algorithms or simulated annealing, and constrained Monte-Carlo sampling to effectively explore the vast permutation space and estimate the density of coherent sequences.
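Two of the search strategies named above can be sketched on a toy problem. Because no computable fNC exists yet, the coherence function here is a hypothetical stand-in: the fraction of adjacent token pairs that appear in an allowed-bigram set. `mc_density` estimates the share of uniformly random permutations clearing a threshold τ, and `anneal` runs simulated annealing with pairwise-swap moves and a geometric cooling schedule. All names and parameter values are illustrative assumptions.

```python
import math
import random
from typing import Callable, List, Sequence, Set, Tuple

Score = Callable[[Sequence[str]], float]


def bigram_score(allowed: Set[Tuple[str, str]]) -> Score:
    """Toy coherence stand-in: fraction of adjacent pairs found in `allowed`."""
    def score(seq: Sequence[str]) -> float:
        pairs = list(zip(seq, seq[1:]))
        return sum(p in allowed for p in pairs) / max(len(pairs), 1)
    return score


def mc_density(tokens: Sequence[str], score: Score, tau: float,
               n_samples: int, seed: int = 0) -> float:
    """Monte-Carlo estimate of |P_{>=tau}| / |P(L_S)| by uniform shuffling."""
    rng = random.Random(seed)
    seq = list(tokens)
    hits = 0
    for _ in range(n_samples):
        rng.shuffle(seq)
        hits += score(seq) >= tau
    return hits / n_samples


def anneal(tokens: Sequence[str], score: Score, steps: int = 20000,
           t0: float = 1.0, cooling: float = 0.9995, seed: int = 0) -> List[str]:
    """Simulated annealing over permutations using random pairwise swaps.

    Returns the best permutation visited (never worse than the start).
    """
    rng = random.Random(seed)
    current = list(tokens)
    rng.shuffle(current)
    cur_s = score(current)
    best, best_s = current[:], cur_s
    temp = t0
    for _ in range(steps):
        i, j = rng.sample(range(len(current)), 2)
        current[i], current[j] = current[j], current[i]
        new_s = score(current)
        # Accept improvements always, worsenings with Boltzmann probability.
        if new_s >= cur_s or rng.random() < math.exp((new_s - cur_s) / temp):
            cur_s = new_s
            if cur_s > best_s:
                best, best_s = current[:], cur_s
        else:
            current[i], current[j] = current[j], current[i]  # undo the swap
        temp = max(temp * cooling, 1e-12)  # avoid division by zero
    return best
```

For a real text, `score` would be replaced by whatever proxy for fNC is adopted, and the allowed-bigram toy would be discarded.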

6.2 Investigating Boundary Conditions and Comparative Analysis

A crucial line of inquiry involves exploring the principle's boundary conditions and parameters. The PNR's key parameters, such as the coherence horizon (N0) and the decay exponent (α), are not expected to be universal constants. Research should investigate how these are modulated by factors like language typology, literary genre, and lexical diversity. Comparing the PNR's predictions against those from established alternative frameworks will be essential for situating the principle within the broader landscape of discourse analysis.
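One simple way to estimate α for a given language or genre, once acceptance rates have been measured at several lengths N ≥ N0, is ordinary least squares on log-transformed data. The sketch below assumes the power-law form C·N^(−α) and uses synthetic, noise-free rates generated from known parameters purely to show the mechanics; it is not an estimate from any real text, and `fit_decay_exponent` is a hypothetical helper.

```python
import numpy as np


def fit_decay_exponent(lengths, rates):
    """Fit rate ~ C * N^(-alpha) by least squares on log(rate) vs log(N); returns (C, alpha)."""
    slope, intercept = np.polyfit(np.log(lengths), np.log(rates), 1)
    return float(np.exp(intercept)), float(-slope)


# Synthetic data only: generated from C = 0.8 and alpha = 1.5 (no real measurements).
lengths = np.array([50, 100, 200, 400, 800], dtype=float)
rates = 0.8 * lengths ** -1.5
C_hat, alpha_hat = fit_decay_exponent(lengths, rates)
print(f"C ~ {C_hat:.3f}, alpha ~ {alpha_hat:.3f}")  # C ~ 0.800, alpha ~ 1.500
```

With real, noisy rates the fit would need to be restricted to N ≥ N0 and reported with uncertainty before exponents from different corpora could be compared.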

6.3 Formal Proofs of Foundational Conjectures

In parallel with empirical work, a vital theoretical track is the pursuit of formal proofs for the principle's foundational conjectures. This involves moving from plausible hypotheses to mathematical certainty by rigorously proving Conjecture 1 for each class of linguistic constraint and, critically, developing a formal treatment of the interdependencies between constraints (Assumption 1). Success in this area would place the PNR on an exceptionally firm theoretical footing.

6.4 Developing Computationally Tractable Proxies and Advanced Models

Addressing the significant computational expense of evaluating a comprehensive fNC(·) metric requires the development of computationally tractable proxy metrics and advanced models of coherence. One of the most promising avenues is the geometric approach outlined in Conjecture G, which seeks efficient geometric or topological correlates of coherence in semantic space. This conjecture is admittedly optimistic: it assumes that current semantic embedding models capture the relevant properties and that the resulting geometry is not overwhelmingly noisy or complex. A more speculative but potentially powerful direction is to formulate coherence using the mathematics of fiber bundles. In such a model, a core semantic trajectory would form the "base space," while evolving stylistic, pragmatic, or discourse features would live in the "fibers." Coherence could then be cast as the problem of finding a smooth, holistic section, that is, a consistent choice of fiber state at every point of the base trajectory, through this total space, providing a framework for disentangling semantic flow from other narrative features. Such models promise not only computational feasibility but also a deeper, more structured understanding of meaning itself.
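Conjecture G's specific correlates are not restated in this section. As one hypothetical reading, a geometric proxy could summarize the path traced by consecutive sentence embeddings. The two statistics below, tortuosity (path length divided by net displacement) and the mean cosine between successive steps, are assumptions of this sketch, and the inputs are toy arrays rather than outputs of any particular embedding model.

```python
import numpy as np


def trajectory_stats(points):
    """Summarize a (T, d) array of embeddings taken in narrative order.

    Assumes T >= 3 and that consecutive embeddings differ (no zero-length steps).
    """
    steps = np.diff(points, axis=0)
    step_lengths = np.linalg.norm(steps, axis=1)
    displacement = np.linalg.norm(points[-1] - points[0])
    tortuosity = step_lengths.sum() / displacement if displacement > 0 else float("inf")
    unit = steps / step_lengths[:, None]
    turning_cos = np.sum(unit[:-1] * unit[1:], axis=1)  # cosine between successive steps
    return {"tortuosity": float(tortuosity), "mean_turning_cos": float(turning_cos.mean())}


# Toy inputs only: evenly spaced points on a line vs. unstructured random points.
straight = np.outer(np.arange(6), np.ones(4))
scatter = np.random.default_rng(0).normal(size=(6, 4))
print(trajectory_stats(straight))  # {'tortuosity': 1.0, 'mean_turning_cos': 1.0}
print(trajectory_stats(scatter))
```

Whether either statistic separates coherent texts from their permutations is exactly the empirical question such a conjecture raises; the sketch only shows that the quantities are cheap to compute.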

Universe 00110000

7 Conclusion

The Principle of Narrative Realization formalizes the intuition that coherent narratives are exceptionally rare configurations within the permutation space of a fixed lexical set. By hypothesizing a heavy-tailed distribution of coherence scores, it offers a theoretical explanation for the apparent uniqueness of extensive narratives. The principle's connections to predictive processing, geometric models of meaning, and its applicability to other domains like music suggest a fruitful and expansive research program. While requiring extensive empirical validation and mathematical refinement, the PNR holds significant potential to impact linguistics, artificial intelligence, and literary studies. Ultimately, this research program points toward a 'geometry of meaning,' suggesting that the deep structure of all coherent information may be governed by universal principles of form and resonance.

Appendix A: Formal Proof of a Polynomial Coherence Bound

This proof adopts a strict, non-compensatory model of coherence (A-Assumption 3) to achieve formal tractability, in contrast to the more general compensatory model defined in Eq. (1).

A.1 Probability Model

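Fix a source narrative $S$ of length $N \ge N_0$ and let $L_S$ be its Fixed Lexical Set. Let $R$ be a permutation drawn uniformly at random from $P(L_S)$. For each constraint class $j \in \{\mathrm{syn}, \mathrm{sem}, \mathrm{ref}, \mathrm{str}, \mathrm{prag}\}$, define an indicator variable $I_j(R) = 1$ if $NC_j(R) \ge \tau_j$ (a predetermined threshold for class $j$), and $I_j(R) = 0$ otherwise. Let $I_\cap(R) = \prod_j I_j(R)$ and define an overall coherence threshold $\tau_{\mathrm{formal}} = \sum_j w_j \tau_j$. If $I_\cap(R) = 1$, then $f_{NC}(R) \ge \tau_{\mathrm{formal}}$, which holds whenever $f_{NC}(R) = \sum_j w_j NC_j(R)$ with nonnegative weights $w_j$.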

A.2 Key Assumptions for the Proof

A-Assumption 1 (Polynomial Acceptance Rate): For each class $j$, there exist constants $c_j > 0$ and $\beta_j > 0$ such that, for $N \ge N_0$, $\Pr[I_j(R) = 1] \le c_j N^{-\beta_j}$. (This formalizes Conjecture 1.)

A-Assumption 2 (Weak Conditional Independence): There exists a constant $\varepsilon \in [0, 1)$ such that $\Pr[I_\cap(R) = 1] \le (1+\varepsilon)^5 \prod_j \Pr[I_j(R) = 1]$, where the exponent 5 is the number of constraint classes considered (syntactic, semantic, referential, structural, pragmatic). (This formalizes Assumption 1, allowing bounded positive correlation.)

A-Assumption 3 (Coherence Equivalence): The thresholds are such that $f_{NC}(R) \ge \tau_{\mathrm{formal}} \iff I_\cap(R) = 1$. (A strong, non-compensatory model of coherence used for this proof.)

A.3 Main Bound

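A-Lemma 1: Under A-Assumptions 1 and 2, $\Pr[I_\cap(R) = 1] \le C_{\mathrm{bound}} N^{-\alpha_{\mathrm{bound}}}$, where $C_{\mathrm{bound}} = (1+\varepsilon)^5 \prod_j c_j$ and $\alpha_{\mathrm{bound}} = \sum_j \beta_j$.

Proof: By A-Assumption 2, $\Pr[I_\cap(R) = 1] \le (1+\varepsilon)^5 \prod_j \Pr[I_j(R) = 1]$. Applying A-Assumption 1 to each factor yields $\Pr[I_\cap(R) = 1] \le (1+\varepsilon)^5 \prod_j c_j N^{-\beta_j} = C_{\mathrm{bound}} N^{-\alpha_{\mathrm{bound}}}$.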

A-Theorem 1 (Polynomial Coherence Bound): Let $P_{\ge \tau_{\mathrm{formal}}} = \{R : f_{NC}(R) \ge \tau_{\mathrm{formal}}\}$. Under A-Assumptions 1-3, the proportion of such permutations is bounded by:

$$\frac{|P_{\ge \tau_{\mathrm{formal}}}|}{|P(L_S)|} \le C_{\mathrm{bound}} N^{-\alpha_{\mathrm{bound}}} \tag{A.1}$$

Proof: The proportion is $\Pr[f_{NC}(R) \ge \tau_{\mathrm{formal}}]$. By A-Assumption 3, this equals $\Pr[I_\cap(R) = 1]$. Applying A-Lemma 1 yields the result, establishing the polynomial upper bound of Eq. (3).

A.4 Discussion of Assumptions

The proof's validity rests on its assumptions. A-Assumption 1 (Polynomial Acceptance Rate) formalizes Conjecture 1, with the exponents $\beta_j$ as primary targets for future empirical estimation. A-Assumption 3 (Coherence Equivalence) simplifies the model for tractability by enforcing a strict, non-compensatory definition of coherence. A-Assumption 2 (Weak Conditional Independence) is the most critical. Without it, one could rely only on the general inequality $\Pr[I_\cap(R) = 1] \le \min_j \Pr[I_j(R) = 1]$. Combined with $\Pr[I_j(R) = 1] \le c_j N^{-\beta_j}$ from A-Assumption 1, this weaker bound implies an overall decay rate of $O(N^{-\max_j \beta_j})$, governed by the single constraint with the largest decay exponent. A-Assumption 2, by positing weak interdependence, lets the constraints combine multiplicatively, yielding the much faster decay rate $O(N^{-\sum_j \beta_j})$. This reflects the intuition that satisfying syntactic rules, maintaining semantic sense, and building a coherent plot are related but distinct challenges, so a permutation that satisfies one of them by chance is still unlikely to satisfy all of them. Demonstrating this weak interdependence empirically is a key challenge for validating the PNR.
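The gap between the two decay rates can be made concrete numerically. The constants below (every $c_j = 1$, the listed $\beta_j$, and $\varepsilon = 0.2$) are hypothetical placeholders chosen only to compare A-Lemma 1 with the min-based bound; they are not estimates of anything.

```python
import math

# Hypothetical placeholders (not estimates): c_j and beta_j for the five classes.
c = {"syn": 1.0, "sem": 1.0, "ref": 1.0, "str": 1.0, "prag": 1.0}
beta = {"syn": 0.5, "sem": 0.3, "ref": 0.2, "str": 0.4, "prag": 0.1}
eps = 0.2  # assumed slack in A-Assumption 2


def min_bound(n):
    """Bound available without A-Assumption 2: min_j c_j * N^(-beta_j)."""
    return min(c[j] * n ** (-beta[j]) for j in c)


def product_bound(n):
    """A-Lemma 1: C_bound * N^(-alpha_bound), with C_bound = (1+eps)^5 * prod_j c_j."""
    c_bound = (1 + eps) ** len(c) * math.prod(c.values())
    alpha_bound = sum(beta.values())
    return c_bound * n ** (-alpha_bound)


for n in (100, 1_000, 10_000):
    print(f"N = {n:>6}: min-based bound = {min_bound(n):.1e}, "
          f"product bound = {product_bound(n):.1e}")
```

With these placeholders the min-based bound falls as N^(-0.5), set by the largest exponent β_syn = 0.5, while the product bound falls as N^(-1.5), the sum of all five exponents.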

Appendix B: A Toy Model for Operationalizing fNC(·)

To demonstrate the principle of calculating the composite coherence score fNC(R), this appendix operationalizes a simplified, computable model for a small-scale example. This exercise is not intended to be a definitive metric but rather to illustrate how the abstract components of fNC(·) can be grounded in concrete, quantitative proxies, making the principle falsifiable in practice.

B.1 Setup: The Sentence and its Permutation Space

Consider the source sentence S = "The cat sat on the mat".

- Fixed Lexical Set (FLS): LS = {the(2), cat(1), sat(1), on(1), mat(1)}

- Total Tokens (N): 6

- Distinct Types (k): 5

- Total Permutations |P(LS)|: 6! / 2! = 360
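The count 6!/2! is the multinomial coefficient N!/∏ m_t!, where m_t is the multiplicity of type t in the FLS. A quick Python check, which assumes lower-casing so that "The" and "the" are one type, computes it both from the formula and by brute-force enumeration of distinct orderings:

```python
import itertools
import math
from collections import Counter

# Source sentence from B.1, lower-cased so both "the" tokens are the same type.
tokens = "The cat sat on the mat".lower().split()

# Multinomial count: N! divided by the factorial of each type's multiplicity.
counts = Counter(tokens)
n_formula = math.factorial(len(tokens))
for multiplicity in counts.values():
    n_formula //= math.factorial(multiplicity)

# Brute-force check: number of distinct orderings of the multiset.
n_enumerated = len(set(itertools.permutations(tokens)))

print(len(tokens), len(counts), n_formula, n_enumerated)  # 6 5 360 360
```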

B.2 Defining Component Score Proxies

We define simple proxies for three of the five coherence components. Each score is normalized to [0, 1]. For this model, we ignore NCstr and NCprag, as they are less relevant for a single sentence.

1. Syntactic Coherence (NCsyn): Based on Part-of-Speech (POS) trigram validity.

- POS tags: {the: DET, cat: NOUN, sat: VERB, on: PREP, mat: NOUN}

- Valid Trigram Patterns (a predefined set): {DET-NOUN-VERB, VERB-PREP-DET, PREP-DET-NOUN}

- Scoring: Slide a three-tag window across the six POS tags, giving four trigram windows. Count the windows whose trigram matches one of the three valid patterns above, and divide that count by 4.

2. Semantic Coherence (NCsem): Based on propositional integrity.

- Scoring: The score is 1.0 if a NOUN token appears anywhere before the VERB token ("sat"), establishing a subject-predicate structure. Otherwise, the score is 0.0.

3. Referential Coherence (NCref): Based on the proximity of determiners (DET) to nouns (NOUN).

- Scoring: For each of the two "the" tokens, count the tokens that intervene between it and the nearest NOUN token in either direction (a directly adjacent noun gives an intervening count of 0). The score for one "the" is `1 / (1 + intervening_count)`. The total NCref score is the average of these two values.

B.3 Calculating fNC for Sample Permutations

We assign weights for the composite score: wsyn=0.5, wsem=0.4, wref=0.1. Thus, fNC(R) = 0.5·NCsyn + 0.4·NCsem + 0.1·NCref.

Table B1: Sample Coherence Score Calculations

| Permutation (R) | NCsyn | NCsem | NCref | fNC(R) |
|---|---|---|---|---|
| "The cat sat on the mat" (original sentence) | 0.75 (3 of 4 trigrams valid) | 1.00 ('cat' is before 'sat') | 1.00 (both 'the's adjacent to nouns) | 0.875 |
| "The mat sat on the cat" (semantically odd variant) | 0.75 (identical POS structure) | 1.00 ('mat' is before 'sat') | 1.00 (identical DET-NOUN proximity) | 0.875 |
| "Sat on the mat the cat" (incoherent variant) | 0.50 (2 of 4 trigrams valid) | 0.00 (no noun before 'sat') | 1.00 (both 'the's adjacent to nouns) | 0.350 |
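A minimal Python sketch of the B.2 proxies with the B.3 weights reproduces the rows of Table B1 and then scores all 360 distinct permutations. It assumes lower-casing (so "Sat" is looked up as "sat") and reads "nearest NOUN" as nearest in either direction; the function and constant names are illustrative, not part of the framework.

```python
import itertools
import math

# POS lexicon, valid trigram patterns and weights exactly as listed in B.2 and B.3.
POS = {"the": "DET", "cat": "NOUN", "sat": "VERB", "on": "PREP", "mat": "NOUN"}
VALID_TRIGRAMS = {("DET", "NOUN", "VERB"), ("VERB", "PREP", "DET"), ("PREP", "DET", "NOUN")}
WEIGHTS = {"syn": 0.5, "sem": 0.4, "ref": 0.1}


def nc_syn(words):
    """Share of the sliding POS-trigram windows that match a valid pattern."""
    tags = [POS[w] for w in words]
    windows = list(zip(tags, tags[1:], tags[2:]))
    return sum(win in VALID_TRIGRAMS for win in windows) / len(windows)


def nc_sem(words):
    """1.0 if some NOUN precedes the VERB 'sat', else 0.0."""
    verb_at = words.index("sat")
    return 1.0 if any(POS[w] == "NOUN" for w in words[:verb_at]) else 0.0


def nc_ref(words):
    """Average of 1 / (1 + intervening tokens) from each 'the' to its nearest NOUN."""
    noun_at = [i for i, w in enumerate(words) if POS[w] == "NOUN"]
    det_at = [i for i, w in enumerate(words) if w == "the"]
    gaps = [min(abs(i - j) - 1 for j in noun_at) for i in det_at]
    return sum(1 / (1 + g) for g in gaps) / len(gaps)


def f_nc(words):
    """Weighted composite score fNC(R) from B.3."""
    return (WEIGHTS["syn"] * nc_syn(words)
            + WEIGHTS["sem"] * nc_sem(words)
            + WEIGHTS["ref"] * nc_ref(words))


for sentence in ("The cat sat on the mat", "The mat sat on the cat", "Sat on the mat the cat"):
    w = sentence.lower().split()
    print(f"{sentence!r}: syn={nc_syn(w):.2f} sem={nc_sem(w):.2f} "
          f"ref={nc_ref(w):.2f} fNC={f_nc(w):.3f}")

# Score every distinct permutation of the FLS to see how rare the top score is.
source = "the cat sat on the mat".split()
scores = [f_nc(list(p)) for p in set(itertools.permutations(source))]
best = max(scores)
n_best = sum(math.isclose(s, best) for s in scores)
print(f"{len(scores)} permutations; max fNC = {best:.3f}, attained by {n_best}")
```

Since no four consecutive windows can all match the three valid patterns, 0.75 is the largest attainable NCsyn here; the original attains it together with NCsem = NCref = 1, so 0.875 is the maximum fNC, and the last line reports how many of the 360 orderings reach it.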

B.4 Discussion

This toy model successfully assigns a high coherence score to the original sentence and its syntactically identical variant, while assigning a significantly lower score to an incoherent permutation. It demonstrates that the composite metric fNC(·) can create a coherence gradient that distinguishes between word orders. The weights used here (e.g., wsyn=0.5) are illustrative; determining a principled, empirically-grounded set of weights is a central challenge for the full realization of this framework. A more sophisticated implementation for longer texts would require more robust proxies (e.g., parser-based syntax scores, distributional semantics for NCsem, coreference resolution for NCref), but the core principle of combining weighted scores from different linguistic strata to quantify coherence remains the same.

Appendix C: Heavy-Tail Monte-Carlo Experiment on Bigram Preservation

To provide a concrete, empirical illustration of the heavy-tailed distribution hypothesized by the PNR, this appendix examines a simple proxy for coherence: the preservation of original bigrams in random permutations. We randomly permute the 18-token clause “To be, or not to be, that is the question: Whether 'tis nobler in the mind to suffer” (punctuation removed and lower-cased) 10,000 times and count, for each permutation, how many of its adjacent pairs are also bigrams of the original, i.e., how many original bigrams survive contiguously and in order. The distribution of these preserved-bigram scores is right-skewed, and on log–log axes its complementary cumulative distribution function (CCDF) shows what we read as an approximately power-law decay, suggesting heavy-tail behavior for this illustrative proxy.

C.1 Results

The Monte Carlo simulation was run for n_trials = 10000 random shuffles of the 18-token excerpt. The histogram in Figure C1 shows the distribution of preserved bigrams, and the log–log CCDF in Figure C2 reveals heavy-tail behavior.

Summary statistics: mean = 1.82; 95th percentile = 4; max observed = 8. The decline of the log–log CCDF over the range k = 2 to k = 6 is taken here as the indication of a heavy tail.
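The reported mean can be checked analytically. By linearity of expectation, each of the 17 adjacent positions of a uniform shuffle holds a given original bigram (x, y) with probability n_x·n_y / (N(N - 1)) when x ≠ y, where n_x is the multiplicity of token x. The sketch below computes this exact mean and, as one light-tailed reference curve (an assumption of this sketch, not a result reported here), the CCDF of a Poisson distribution with the same mean, which could be overlaid on Figure C2 when judging how heavy the empirical tail is.

```python
import math
from collections import Counter

# Same 18-token excerpt as in C.2.
text = ("To be or not to be that is the question "
        "Whether 'tis nobler in the mind to suffer").lower().split()
n = len(text)
counts = Counter(text)
original_bigrams = set(zip(text, text[1:]))


def pair_prob(x, y):
    """P(two fixed adjacent positions hold types x then y) under a uniform shuffle."""
    same = 1 if x == y else 0
    return counts[x] * (counts[y] - same) / (n * (n - 1))


# Linearity of expectation over the n - 1 adjacent positions.
expected = (n - 1) * sum(pair_prob(x, y) for x, y in original_bigrams)


def poisson_ccdf(k, lam):
    """P(X >= k) for X ~ Poisson(lam)."""
    return 1.0 - sum(math.exp(-lam) * lam ** i / math.factorial(i) for i in range(k))


print(f"exact mean = {expected:.3f}")  # exact mean = 1.833
for k in range(1, 9):
    print(f"k = {k}: Poisson reference P(X >= k) = {poisson_ccdf(k, expected):.2e}")
```

The exact mean, 33/18 ≈ 1.833, is close to the reported Monte Carlo mean of 1.82.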

C.2 Python Implementation

```python
import random
from pathlib import Path

import matplotlib.pyplot as plt
import numpy as np

# ------------------------------ Setup ------------------------------ #
random.seed(42)  # reproducibility
out_dir = Path('images')
out_dir.mkdir(exist_ok=True)

# 18-token excerpt, punctuation removed and lower-cased.
text = ("To be or not to be that is the question "
        "Whether 'tis nobler in the mind to suffer").lower().split()
original_bigrams = set(zip(text, text[1:]))
n_trials = 10_000

# ------------------------- Monte-Carlo loop ------------------------- #
scores = []
for _ in range(n_trials):
    perm = text[:]
    random.shuffle(perm)
    # Count adjacent pairs in the shuffle that are also bigrams of the original.
    score = sum((b in original_bigrams) for b in zip(perm, perm[1:]))
    scores.append(score)
scores = np.array(scores)

# ----------------------------- Plots ----------------------------- #
plt.figure(figsize=(8, 5))
plt.hist(scores, bins=range(scores.max() + 2), align='left',
         edgecolor='black')
plt.title("Histogram of Preserved Bigrams")
plt.xlabel("k = preserved bigrams")
plt.ylabel("Frequency")
plt.xticks(range(scores.max() + 1))
plt.grid(True)
plt.savefig(out_dir / 'ht_histogram.png', dpi=300, bbox_inches='tight')
plt.close()

values, counts = np.unique(scores, return_counts=True)
# Empirical CCDF: P(score >= k) for each observed k (values are sorted ascending).
ccdf = np.cumsum(counts[::-1])[::-1] / n_trials
# k = 0 cannot be placed on a log axis, so plot only k >= 1.
positive = values > 0

plt.figure(figsize=(8, 5))
plt.loglog(values[positive], ccdf[positive], marker='o')
plt.title("CCDF of Preserved Bigrams")
plt.xlabel("k (preserved bigrams)")
plt.ylabel("P(score ≥ k)")
plt.grid(which='both')
plt.savefig(out_dir / 'ht_ccdf.png', dpi=300, bbox_inches='tight')
plt.close()

# ------------------------- Summary stats ------------------------- #
print(f"Mean = {scores.mean():.2f}; 95th pct = {np.percentile(scores, 95):.0f}; "
      f"max observed = {scores.max()}")
```